Test against what won't change

You should only have to change your tests when you change what your system does, not how it does it.

When I started to code professionally, the idea that programmers should test their own code was still a bit radical - management saw it as roughly akin to trusting a drug smuggler to fill out their own customs form at the airport. Personally I found this pretty frustrating, because I (a green programmer who knew everything) had read what all the smart people were saying, and knew that unit testing not only increased software quality and allowed for a quicker development cycle, but crucially allowed for easier refactoring.

Eventually I did get onto a project where unit testing was not only permitted, but extreme thoroughness was encouraged. This was a big step forward - fewer bugs shipped, it was quicker and more fun to develop, and refactoring... took forever because we had to rewrite every single test every time we did it.

Wait what, wasn't the whole point of this to make refactoring quicker?!

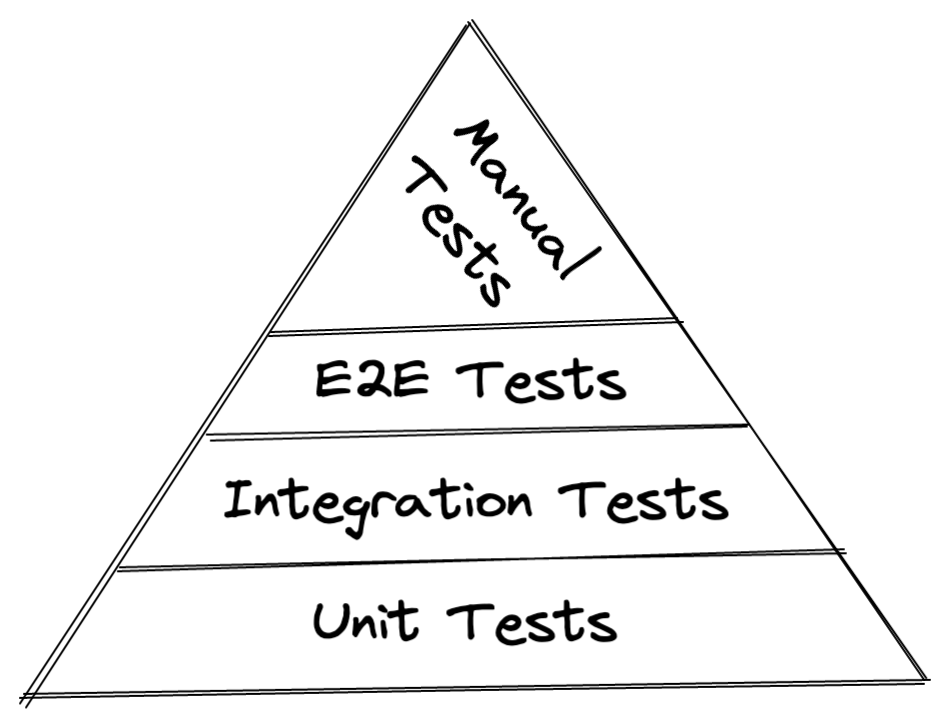

The elegance of the test pyramid

The way that I initially wrote tests was to focus the most effort on the lowest level (the unit), a bit less effort on tests that tie units together (integration tests), less effort again on tests that exercise a full running version of all components (end-to-end tests), and so on. This is known as the Test Pyramid.

By testing at a low level, we should be able to reduce the total number of tests we have to write. For example, if we have a bubble sort implementation that takes a List interface as an argument, we can pass in a mocked List and write tests against that to ensure it’s making the list calls that we expect. Then, if we have LinkedList, ArrayList and other implementations, we can write unit tests to make sure each implements the List interface properly. After that we can be pretty sure that passing any List implementation, current or future, into our bubble sort will work, without having to specifically test bubbleSort() + ArrayList or bubbleSort() + LinkedList.

It's a really elegant way to work - and work it does, because out of sheer luck we're writing our tests against an interface (List) that won't change - no matter how much we refactor or optimise the code under test, List will still be the same and our tests won't need to be rewritten.
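As a rough illustration of the bubble sort idea, here is a minimal Python sketch. The `RecordingList` test double is hypothetical and hand-rolled for this example, and Python's built-in `list` and `collections.deque` stand in for ArrayList and LinkedList. The sort only relies on `len()`, index reads and index writes, which plays the role of the stable List interface.

```python
from collections import deque


def bubble_sort(items):
    """Sort in place using only len(items), items[i] and items[i] = value."""
    n = len(items)
    for end in range(n - 1, 0, -1):
        for i in range(end):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
    return items


class RecordingList:
    """Hypothetical test double: behaves like a list and records every write."""

    def __init__(self, values):
        self._values = list(values)
        self.writes = []

    def __len__(self):
        return len(self._values)

    def __getitem__(self, index):
        return self._values[index]

    def __setitem__(self, index, value):
        self.writes.append((index, value))
        self._values[index] = value


# Test the sort against the interface, via the double.
double = RecordingList([2, 1])
bubble_sort(double)
assert double.writes == [(0, 1), (1, 2)]  # one swap: two writes

# Any type that honours the same interface should then work unchanged.
assert bubble_sort([3, 1, 2]) == [1, 2, 3]
assert list(bubble_sort(deque([3, 1, 2]))) == [1, 2, 3]
```

The test against the double keeps passing however the inside of `bubble_sort` is tidied up, as long as it still performs the same swaps through the same three operations.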

The pyramids took decades to build

The problem with the test pyramid is that few of us actually get jobs writing beautiful List implementations, or other pieces of work in which the interfaces of our units don't change, and this causes the test pyramid approach to become much less useful.

Let's consider a more common scenario for my career - a web service endpoint that receives input from a client, makes a call to another web service to enhance it with more data, then saves that to a database.

Let's say the way we've divided up the code looks like this:

- A POST handler that handles the incoming HTTP call

- An API client that encapsulates the logic for calling the other web service

- A database repository that encapsulates the logic for saving the data in the database

If we were to unit test this according to the pyramid, maybe we’d end up with:

- Testing the POST handler against a mocked API client and database repository

- Testing our API client’s methods against a mocked HTTP client

- Testing the database repository against a mocked ORM library

The unfortunate result of testing like this is that we'll have to rewrite our tests in many scenarios - if we:

- Make optimisations - e.g. if we collapse a few separate calls to our repository into a single custom query for performance, the app will act exactly the same but the methods that we call from our repository will be different, so we'll need to write a new test for the new method in the repository, and change the POST handler tests that mock it

- Refactor across multiple units - e.g. if we end up with an unintuitive interface around our repository class and we want to change it into a simpler set of methods then we'll need to change both the repository tests and the tests for anything that calls the repository.

- Restructure code - say we don’t like the repository pattern any more, and we want to move database access into commands, or have the handler access the database directly for simplicity. We’ll have to completely delete all the repository tests and the tests for anything that calls it and start over.

- Change any of our libraries (ORM, HTTP clients etc) - happily we'll only have to change the tests inside one of our units, but we will have to change every test in that unit.
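To make the first scenario concrete, here is a small Python sketch using `unittest.mock`. The handler functions and the repository method names (`save_user`, `save_audit_entry`, `save_user_with_audit`) are invented for illustration and are not taken from any real codebase. Both handlers behave identically from the caller's point of view, but the mock-based test only passes for the first.

```python
from unittest.mock import Mock


def handle_post_v1(payload, repository):
    """Original handler: two separate repository calls."""
    repository.save_user(payload["name"])
    repository.save_audit_entry("created", payload["name"])
    return 201


def handle_post_v2(payload, repository):
    """Optimised handler: same observable behaviour, one combined call."""
    repository.save_user_with_audit(payload["name"], "created")
    return 201


def mock_based_test(handler):
    """Pyramid-style unit test that asserts on the mocked repository calls."""
    repository = Mock()
    assert handler({"name": "ada"}, repository) == 201
    repository.save_user.assert_called_once_with("ada")
    repository.save_audit_entry.assert_called_once_with("created", "ada")


def passes(handler):
    """Report whether the mock-based test passes for the given handler."""
    try:
        mock_based_test(handler)
        return True
    except AssertionError:
        return False
```

Here `passes(handle_post_v1)` is true and `passes(handle_post_v2)` is false, even though a client of the endpoint could not tell the two handlers apart. The test is pinned to how the handler talks to the repository rather than to what the system does.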

Write tests against what won't change

Generally, a software system will expose some kind of interface to allow it to be used - a web service might have a REST API, a local tool might have a command line, or a library might expose a set of public functions or classes. We tend to put extra effort into thinking through the design of these public interfaces because once they're exposed, making changes without breaking functionality for those who are using them is very difficult. This also applies to external components that our system is dependent on - for instance an external microservice will have its own API that seldom changes, and our database schema will change slowly because making non-backwards-compatible schema changes is difficult and risky.

When we write tests, we've inevitably got to choose an interface to write against - for a unit test, this is the interface of whatever unit is under test, usually the type signature of a function or the public methods on a class. These unit interfaces tend to change often in response to refactoring, optimisations, new requirements and so on, and they should be able to change quickly too, or we can't make any of these important improvements.

Instead what we want to do is write our tests against interfaces that seldom change, and thus we should target public, external interfaces, which are (generally) better designed and much slower to change than the non-exposed interfaces on units. For example, in our scenario above, we could treat our system as a black box and use its HTTP API as the interface that we use to test it (e.g. using supertest), use a mocked HTTP API for the web service it calls (e.g. using nock), and run it against a real database that we reset after each test.
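The black-box setup described above can be sketched with nothing but Python's standard library, in place of supertest and nock. Everything specific here is an assumption made for the example: the `POST /users` endpoint, the upstream `GET /profiles/<name>` call, the `country` field, and a fresh in-memory SQLite database standing in for a real database that is reset after each test.

```python
import http.client
import json
import sqlite3
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class QuietHandler(BaseHTTPRequestHandler):
    def log_message(self, *args):  # silence per-request logging in tests
        pass

    def send_json(self, status, payload):
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


class FakeProfileService(QuietHandler):
    """Stand-in for the external web service (hypothetical API)."""

    def do_GET(self):
        self.send_json(200, {"country": "NZ"})


def make_app(db, upstream_port):
    """The black box under test: POST /users enriches the input and saves it."""

    class App(QuietHandler):
        def do_POST(self):
            length = int(self.headers["Content-Length"])
            user = json.loads(self.rfile.read(length))
            upstream = http.client.HTTPConnection("127.0.0.1", upstream_port)
            upstream.request("GET", "/profiles/" + user["name"])
            extra = json.loads(upstream.getresponse().read())
            upstream.close()
            db.execute("INSERT INTO users VALUES (?, ?)",
                       (user["name"], extra["country"]))
            db.commit()
            self.send_json(201, {"name": user["name"], **extra})

    return App


def serve(handler_class):
    """Run a handler on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), handler_class)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def run_black_box_test():
    # A fresh in-memory database per test plays the role of "reset after each test".
    db = sqlite3.connect(":memory:", check_same_thread=False)
    db.execute("CREATE TABLE users (name TEXT, country TEXT)")
    upstream = serve(FakeProfileService)
    app = serve(make_app(db, upstream.server_port))
    try:
        client = http.client.HTTPConnection("127.0.0.1", app.server_port)
        client.request("POST", "/users",
                       body=json.dumps({"name": "ada"}).encode(),
                       headers={"Content-Type": "application/json"})
        response = client.getresponse()
        status, body = response.status, json.loads(response.read())
        client.close()
        rows = db.execute("SELECT name, country FROM users").fetchall()
    finally:
        for server in (app, upstream):
            server.shutdown()
            server.server_close()
    return status, body, rows
```

The test only touches three things: the HTTP request it sends, the HTTP response it gets back, and the rows that end up in the database. Whether the code inside `make_app` uses a repository class, commands, or direct database access is invisible to it, so restructuring that code should not require touching the test.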

Once we've got this kind of test set up, we can refactor and optimise to our heart's content within our black box, and not only will we not need to change our tests, the existing ones will be sufficient to test whatever change we've made. In addition, testing in this way catches a whole class of problems that we wouldn't normally think to test for - e.g. what if we accidentally remove the line of code that sets up JSON parsing on incoming requests? Easy to miss in unit testing, but it'll instantly break nearly every test written against the HTTP API.

The final, less concrete advantage of testing in this way is that thinking at a more zoomed-out level pushes you towards writing tests that are grounded in business logic rather than in implementation details. You're much more likely to see tests along the lines of "user details are not visible for non-admin users" and fewer along the lines of "AuthMiddleware returns false when the required permissions are not a subset of the user permissions". The former is much easier to understand.

Why not just write tests against the UI then?

If the idea is to write tests against interfaces and make refactoring as easy as possible, then it follows that for user-facing applications you should spin your entire app up and use the UI as the interface that you run your test against.

There is merit to this kind of thinking, but unfortunately user interfaces are not examples of interfaces that seldom change... rather they change often, because they're looking a bit out of date, or because a new designer was hired and wants to make their mark, or in order to resemble a competitor.

In addition, executing this kind of test can be difficult - if you're running an application that integrates with many external dependencies, it's difficult to set up an environment that has everything you need, and while technology for writing UI tests is continually improving, it's still much more difficult than writing tests against machine-readable interfaces.

I don't do labels

This approach is pretty close to writing "mostly integration" tests, or Kent C. Dodds' Testing Trophy, but rather than trying to define "integration" or "unit", my preference is to focus on core principles:

- you should only have to change your tests when you change what your system does, not how it does it, and

- the easiest way to make that happen is to write them against the interfaces that your system presents to either other systems or to the user.

If you keep these in mind, you should be on solid ground regardless of nomenclature.

Further Reading

- https://www.tedinski.com/2019/03/19/testing-at-the-boundaries.html

- https://matklad.github.io/2021/05/31/how-to-test.html#test-driven-design-ossification

- https://techbeacon.com/app-dev-testing/no-1-unit-testing-best-practice-stop-doing-it

- https://rbcs-us.com/documents/Why-Most-Unit-Testing-is-Waste.pdf

- https://rbcs-us.com/documents/Segue.pdf

- https://kentcdodds.com/blog/the-testing-trophy-and-testing-classifications